Conquering the `aws lambda size limit`: Practical Tips for Your Serverless Projects

When you're building with serverless technology, understanding the `aws lambda size limit` is essential. It's like knowing the dimensions of your garage before you try to park a really big vehicle. A Lambda function is a piece of code that runs in response to events. If it gets too chunky, it won't deploy at all. Even when it does fit, a bigger package can slow down how quickly it starts. Either way, it can throw a wrench into your development plans and cause delays and frustration.

Amazon Web Services (AWS) describes itself as the world's most comprehensive and broadly adopted cloud platform, offering over 200 fully featured services from data centers globally. Lambda is just one of those services. Others cover compute, storage, databases, networking, data lakes and analytics, and machine learning and artificial intelligence. So when you work with Lambda, you're tapping into a very large ecosystem.

This article walks through the `aws lambda size limit`: what the limits are, why they exist, and how to work within them. We'll cover practical strategies for keeping your functions lean and free of unnecessary bulk, so your serverless applications run smoothly and reliably.

Table of Contents

- Understanding AWS Lambda's Core Limits

- Why Do These Limits Exist, Anyway?

- Practical Strategies for Staying Under the `aws lambda size limit`

- When Your Lambda Grows Beyond the Standard

- Setting Up Your AWS Environment for Success

- Frequently Asked Questions About AWS Lambda Size Limits

Understanding AWS Lambda's Core Limits

When you're working with AWS Lambda, keep a few key `aws lambda size limit` numbers in mind from the start. These limits help keep the service responsive and efficient for everyone. They affect not only whether your code deploys but also how it behaves once it's running.

The Deployment Package Size Limit (Zipped)

The first limit you'll bump into is the size of your zipped deployment package. This package is all your code and its dependencies bundled into a single .zip archive. If you upload the .zip directly to Lambda, for example through the console or CLI, it can be at most 50 MB. You get much more room if you upload it to an Amazon S3 bucket first and point Lambda at that object. In that case the effective ceiling is the 250 MB unzipped limit described below, not the 50 MB direct-upload cap. That's a key distinction to remember.

This zipped limit affects how you prepare your function for deployment. Suppose your project, with all its libraries and modules, exceeds it. The deployment fails with an error telling you the package is too large. So you need to be deliberate about what goes into that .zip file.
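You can catch an oversized package before an upload fails by measuring the archive yourself. This is a minimal sketch that uses only Python's standard library. It assumes 1 MB means 1,048,576 bytes, and the function names are illustrative.

```python
import os
import zipfile

# Direct-upload cap for a zipped package, assuming binary megabytes.
DIRECT_UPLOAD_LIMIT = 50 * 1024 * 1024


def build_package(src_dir: str, zip_path: str) -> int:
    """Zip every file under src_dir into zip_path and return the archive size in bytes.

    zip_path should live outside src_dir so the archive doesn't include itself.
    """
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(src_dir):
            for name in files:
                full_path = os.path.join(root, name)
                # Store paths relative to src_dir so the handler file sits at
                # the root of the archive.
                zf.write(full_path, arcname=os.path.relpath(full_path, src_dir))
    return os.path.getsize(zip_path)


def fits_direct_upload(zip_size: int, limit: int = DIRECT_UPLOAD_LIMIT) -> bool:
    """Return True if a zipped package of zip_size bytes is within the direct-upload cap."""
    return zip_size <= limit
```

For example, `fits_direct_upload(build_package("build", "function.zip"))` tells you whether a hypothetical `build/` folder can be uploaded directly. A `False` result means you should switch to the S3 route or trim the package.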

The Unzipped Function Size Limit

Even if your zipped package is small enough, there's another `aws lambda size limit` to consider: the unzipped size of your function. When Lambda sets up an execution environment, it extracts your package there to run your code. The extracted contents can total at most 250 MB. That figure covers your function code, its dependencies, and any layers or custom runtime you attach. The managed runtime that AWS provides doesn't count against it.

The unzipped limit is often where people run into trouble, especially with Python or Node.js projects that pull in many dependencies. Compression can shrink files dramatically, so a package that sits comfortably under 50 MB zipped can still expand past 250 MB. Check both figures whenever you prepare a deployment.
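A .zip archive records each file's uncompressed size, so you can estimate the extracted total without unpacking anything. This sketch reads those sizes with the standard `zipfile` module. Layers also count toward the 250 MB ceiling, so the sketch lets you add their unzipped sizes too. The function names are illustrative, and binary megabytes are assumed.

```python
import zipfile

# Ceiling for extracted function code plus layers and custom runtime (binary MB assumed).
UNZIPPED_LIMIT = 250 * 1024 * 1024


def unzipped_size(zip_path: str) -> int:
    """Sum the uncompressed sizes recorded in the archive's directory."""
    with zipfile.ZipFile(zip_path) as zf:
        return sum(info.file_size for info in zf.infolist())


def check_unzipped(zip_path: str, layer_unzipped_sizes=()) -> tuple:
    """Return (total_bytes, fits) for the package plus any layers you plan to attach."""
    total = unzipped_size(zip_path) + sum(layer_unzipped_sizes)
    return total, total <= UNZIPPED_LIMIT
```

An archive holding a megabyte of repeated characters compresses to a few kilobytes. `unzipped_size` still reports the full megabyte, and that full size is what counts against the limit.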

Other Related Limits to Consider

Beyond the limits on deployment and unzipped code, a few related constraints can affect how you build your functions. The first is the `/tmp` directory, a scratch area available to your function during execution. It defaults to 512 MB and can be configured up to 10,240 MB (10 GB). Your function can write temporary files there, for example while processing data or caching downloads, but it isn't persistent storage. Files may survive between invocations that reuse the same execution environment. They're gone whenever a new environment is created, so don't rely on them being there.
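Because `/tmp` is a fixed-size scratch area, it can help to check free space before writing a large temporary file. This is a minimal sketch under that assumption. It falls back to `tempfile.gettempdir()`, which is normally `/tmp` on Linux, so the same code runs locally. The helper names are hypothetical.

```python
import os
import shutil
import tempfile
from typing import Optional


def tmp_has_room(required_bytes: int, directory: Optional[str] = None) -> bool:
    """Check free space on the filesystem that holds the scratch directory."""
    directory = directory or tempfile.gettempdir()
    return shutil.disk_usage(directory).free >= required_bytes


def write_scratch_file(data: bytes, directory: Optional[str] = None) -> str:
    """Write data to a new, uniquely named scratch file and return its path."""
    directory = directory or tempfile.gettempdir()
    if not tmp_has_room(len(data), directory):
        raise OSError(f"not enough free space in {directory} for {len(data)} bytes")
    fd, path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as f:
        f.write(data)
    return path
```

Delete scratch files once you're done with them. Leftovers from earlier invocations in the same environment still take up space.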

Environment variables, the key-value pairs you configure for your function, are limited to 4 KB in total. They're meant for configuration, not large data payloads, and you'll hit that limit quickly if you stuff too much information into them. These smaller limits aren't about code size, but they can still shape your architectural choices and how you design your overall solution.
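Here is a quick pre-deployment check for environment variables. The exact way Lambda measures the 4 KB total isn't spelled out here, so this sketch only approximates it by counting the UTF-8 bytes of every key and value. Treat the result as an estimate and leave some headroom.

```python
# Total size limit for a function's environment variables (4 KB, binary assumed).
ENV_VARS_LIMIT = 4 * 1024


def env_vars_size(variables: dict) -> int:
    """Approximate size: UTF-8 bytes of every key plus every value.

    Lambda's own accounting may differ slightly from this estimate.
    """
    return sum(len(k.encode("utf-8")) + len(v.encode("utf-8")) for k, v in variables.items())


def env_vars_fit(variables: dict, limit: int = ENV_VARS_LIMIT) -> bool:
    """Return True if the estimated size is within the limit."""
    return env_vars_size(variables) <= limit
```

If a value won't fit, it probably belongs in a separate store such as S3 or a parameter service. Keep only a pointer to it, such as a key or name, in the environment variable.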

Why Do These Limits Exist, Anyway?

You might wonder why AWS imposes an `aws lambda size limit` in the first place. It's not to make your life harder. The limits follow from how serverless functions work and how AWS manages its infrastructure across the globe. They help keep things running smoothly and efficiently for everyone.

Performance and Cold Starts

One of the biggest reasons for the `aws lambda size limit` is performance, particularly so-called "cold starts." Sometimes no warm execution environment is available for a function, for example after it has been idle for a while. AWS then has to create a new one. That involves downloading your code package, extracting it, and getting everything ready to run. The larger your package, the longer this generally takes, which means a slower cold start for your users.

A smaller package means faster downloads and quicker setup. AWS wants your functions to be as responsive as possible, and these limits nudge developers toward lean code. A smaller footprint helps functions start up without much delay, which improves the user experience.

Resource Management for AWS

Another key reason is resource management. AWS runs an enormous number of Lambda functions for countless customers. Without a size limit, people could deploy extremely large applications to Lambda. Those applications would consume vast amounts of storage and processing resources across AWS's infrastructure. That could strain the system and hurt reliability and performance for other users.

These limits let AWS allocate and manage the underlying compute and storage resources efficiently. That keeps the service stable and scalable, so there's room for everyone to build and scale their solutions with confidence.

Practical Strategies for Staying Under the `aws lambda size limit`

So you know the limits. How do you actually stay within them as your project grows? There are several ways to keep your Lambda functions trim so you don't hit the `aws lambda size limit` unexpectedly. It all comes down to being thoughtful about your code and its dependencies.

Slimming Down Your Code and Dependencies

The first and most direct approach is to reduce what goes into your deployment package, which means being selective about your code and its dependencies. Do you really need every library you've installed? You can often exclude development dependencies and the parts of a library your function never uses. If you rely on a large library for one small feature, you can sometimes include just that part.
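Before deciding what to cut, it helps to know where the bytes are. This sketch walks a build folder, such as one populated by `pip install -t`, and totals file sizes per top-level entry. Each installed dependency usually has its own top-level folder, so this points at the heaviest libraries. The function name is illustrative.

```python
import os


def size_by_top_level(root: str) -> list:
    """Return (top-level entry, total bytes) pairs under root, largest first."""
    totals = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            # The first path component relative to root is the package or file name.
            top = os.path.relpath(full_path, root).split(os.sep)[0]
            totals[top] = totals.get(top, 0) + os.path.getsize(full_path)
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)
```

Printing the first few entries of `size_by_top_level("build")` is usually enough to see which dependency is worth replacing or trimming.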

Tools like `webpack` for JavaScript, or `zip` with exclusion flags, can help you bundle only the files you actually need. For Python, you might run `pip install -r requirements.txt -t .` to install dependencies directly into your project folder. Then remove unnecessary files such as test directories, documentation, and cached files before zipping. It's a bit of extra work, but it can make a big difference in package size.
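Here is one way to automate the manual cleanup step after `pip install -t`. It's a sketch, and the set of names in `PRUNE_DIRS` is an assumption. Some libraries import their own `tests` module at runtime, so run your function's tests against the pruned folder before deploying. Stripping cached bytecode also means Python compiles those modules at import time, so compare cold starts before and after.

```python
import os
import shutil

# Directory names and file suffixes assumed safe to drop; adjust for your dependencies.
PRUNE_DIRS = {"__pycache__", "tests", "docs"}
PRUNE_SUFFIXES = (".pyc", ".pyo")


def prune_package(root: str) -> int:
    """Delete matching directories and files under root; return how many entries were removed."""
    removed = 0
    for dirpath, dirnames, filenames in os.walk(root, topdown=True):
        for d in list(dirnames):
            if d in PRUNE_DIRS:
                shutil.rmtree(os.path.join(dirpath, d))
                # Remove it from the walk so os.walk doesn't descend into a deleted folder.
                dirnames.remove(d)
                removed += 1
        for f in filenames:
            if f.endswith(PRUNE_SUFFIXES):
                os.remove(os.path.join(dirpath, f))
                removed += 1
    return removed
```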

Leveraging Lambda Layers

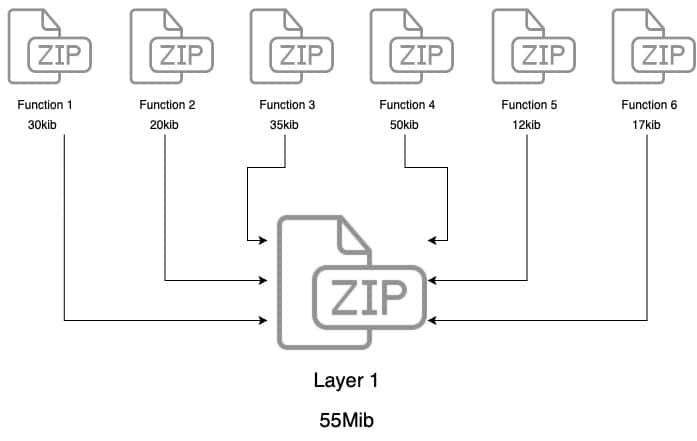

Lambda Layers can be a game-changer for managing the `aws lambda size limit`, especially with shared dependencies. A layer is a .zip file archive that contains supplementary code or data, such as libraries, a custom runtime, or configuration files. You can attach up to five layers to a function. Their contents are extracted into the function's execution environment under `/opt`. This means you don't have to include common dependencies in every function's deployment package.

For instance, suppose several Python functions all use the `requests` library. You can create one layer that contains `requests` and attach it to each of those functions. Each function's own deployment package then only needs its unique code, which makes layers an effective way to share code and keep packages small. Keep in mind that layers still count toward the 250 MB unzipped limit. They reduce and deduplicate what you upload per function, but they don't raise the overall ceiling.
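Lambda's Python runtimes add `/opt/python` to the import path. A Python layer therefore places its packages under a top-level `python/` folder inside the .zip. This sketch builds such an archive from a folder of installed packages, such as one created with `pip install requests -t layer_src`. The folder and file names are hypothetical.

```python
import os
import zipfile


def build_python_layer(site_packages_dir: str, zip_path: str) -> str:
    """Zip installed packages under a top-level 'python/' prefix and return zip_path.

    Lambda extracts layers to /opt, so these files end up in /opt/python/...
    """
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, _dirs, files in os.walk(site_packages_dir):
            for name in files:
                full_path = os.path.join(root, name)
                rel = os.path.relpath(full_path, site_packages_dir)
                # Use forward slashes inside the archive regardless of the local OS.
                zf.write(full_path, arcname="python/" + rel.replace(os.sep, "/"))
    return zip_path
```

You could then publish the archive with `aws lambda publish-layer-version --layer-name shared-requests --zip-file fileb://layer.zip --compatible-runtimes python3.12`. The layer name and runtime here are examples. Attach the resulting layer version to each function that needs it.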

Using Container Images for Larger Functions

Sometimes your function needs to be larger than the .zip-based limits allow. For those cases, Lambda supports container images. Instead of zipping up your code, you can package your function as a container image of up to 10 GB, including your code, runtime, and all dependencies. That's a huge jump in capacity.

This option suits functions with extensive dependencies, custom runtimes, or machine learning models that need many libraries. You build the image from a Dockerfile, push it to Amazon Elastic Container Registry (ECR), and configure your Lambda function to use that image. It gives you much more flexibility and control over the environment, especially for heavyweight applications.
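The build, push, and configure steps map onto a handful of standard Docker and AWS CLI commands. This sketch only assembles those commands as strings for you to review and run, and it doesn't execute anything. It assumes a Dockerfile in the current directory, built on one of AWS's Lambda base images, and an existing IAM execution role. The account ID, repository, function name, and role ARN are placeholders.

```python
import shlex


def ecr_image_uri(account_id: str, region: str, repo: str, tag: str = "latest") -> str:
    """Build an image URI in the format ECR uses for private repositories."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


def container_deploy_script(account_id: str, region: str, repo: str,
                            function_name: str, role_arn: str, tag: str = "latest") -> list:
    """Return the shell commands for a first-time container deployment (not executed here)."""
    registry = f"{account_id}.dkr.ecr.{region}.amazonaws.com"
    uri = ecr_image_uri(account_id, region, repo, tag)
    q = shlex.quote
    return [
        # Create the ECR repository that will hold the image.
        f"aws ecr create-repository --repository-name {q(repo)} --region {q(region)}",
        # Build from the Dockerfile in the current directory, tagged with the ECR URI.
        f"docker build -t {q(uri)} .",
        # Authenticate Docker against the registry, then push the image.
        f"aws ecr get-login-password --region {q(region)} | "
        f"docker login --username AWS --password-stdin {q(registry)}",
        f"docker push {q(uri)}",
        # Create a function whose code is the container image rather than a .zip.
        f"aws lambda create-function --function-name {q(function_name)} "
        f"--package-type Image --code ImageUri={q(uri)} --role {q(role_arn)} --region {q(region)}",
    ]
```

Printing `"\n".join(container_deploy_script(...))` gives you a script you can inspect before running it. Later deployments usually skip the repository and function creation steps and just build, push, and update the function's image.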

Externalizing Data and Assets

Sometimes your function's "size" isn't just code but also static assets or large data files it needs to access. Don't bundle large configuration files, images, or even small databases directly into your deployment package. Instead, store them in a service like Amazon S3 or Amazon DynamoDB and have your function retrieve them at runtime as needed.

This keeps your package within the `aws lambda size limit` because the large files aren't part of the deployment. It also lets you update those assets without redeploying your function. Separating code from data is a common pattern in cloud development, and it works well with Lambda.
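A common way to combine externalized assets with the `/tmp` scratch space is to download an asset on first use and reuse the local copy while the execution environment stays warm. In this sketch the download function is injected, so the caching logic can be tested without AWS. The bucket name and helper names are hypothetical.

```python
import os
import tempfile
from typing import Callable, Optional

# fetch(key, destination_path) downloads one object to a local file.
Fetch = Callable[[str, str], None]


def load_asset(key: str, fetch: Fetch, cache_dir: Optional[str] = None) -> bytes:
    """Return the asset's bytes, downloading it only if it isn't cached locally yet.

    A warm execution environment reuses the cached file; a new one downloads again.
    """
    cache_dir = cache_dir or tempfile.gettempdir()
    local_path = os.path.join(cache_dir, key.replace("/", "_"))
    if not os.path.exists(local_path):
        partial = local_path + ".part"
        fetch(key, partial)
        # Rename only after a complete download, so a failed fetch leaves no bad cache entry.
        os.replace(partial, local_path)
    with open(local_path, "rb") as f:
        return f.read()


# In a Lambda handler you might pass a boto3-backed fetch:
#   s3 = boto3.client("s3")
#   data = load_asset("config/rules.json",
#                     lambda key, dest: s3.download_file("my-assets-bucket", key, dest))
```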

When Your Lambda Grows Beyond the Standard

Even with all these tricks, some tasks are simply too large or too complex for a single Lambda function, even with container images. In those cases, consider other AWS services designed for larger, more distributed workloads. AWS offers a broad range of services, so there are usually options.

Considering AWS Step Functions

AWS Step Functions can be a powerful tool for orchestrating complex workflows with multiple steps, some of which may be resource-intensive. Step Functions lets you define a state machine that coordinates AWS services, including Lambda functions, reliably and at scale. You can break a large task into smaller, manageable Lambda functions and let Step Functions manage the flow between them.

That way, no single Lambda function has to grow too large or do too much. Each function focuses on one part of the workflow, which keeps its package small and its purpose clear. Step Functions handles retries, error handling, and state management. That's a big help for complex processes and lets you build and scale your solutions with confidence.
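Step Functions workflows are defined in Amazon States Language, a JSON format. This sketch builds a two-step definition that chains two small Lambda functions and retries each on any error with exponential backoff. The step names and function ARNs are hypothetical.

```python
import json


def two_step_definition(extract_arn: str, transform_arn: str) -> str:
    """Return an Amazon States Language definition chaining two Lambda tasks."""
    # Retry any error up to 3 times, waiting 2 s, then 4 s, then 8 s.
    retry = [{
        "ErrorEquals": ["States.ALL"],
        "IntervalSeconds": 2,
        "MaxAttempts": 3,
        "BackoffRate": 2.0,
    }]
    definition = {
        "Comment": "Split one large job into two small Lambda functions",
        "StartAt": "Extract",
        "States": {
            "Extract": {"Type": "Task", "Resource": extract_arn,
                        "Retry": retry, "Next": "Transform"},
            "Transform": {"Type": "Task", "Resource": transform_arn,
                          "Retry": retry, "End": True},
        },
    }
    return json.dumps(definition, indent=2)
```

You could pass the resulting JSON to `aws stepfunctions create-state-machine` with its `--name`, `--definition`, and `--role-arn` options. Each function then stays small while the state machine carries the overall workflow.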

Exploring Other AWS Services for Heavy Lifting

Lambda may not be the best fit, even with its larger container image limit, if your workload involves:

- very long-running processes (a single Lambda invocation is capped at 15 minutes),
- very large-scale data processing, or
- specialized hardware.

Other AWS services are better suited to this kind of heavy lifting. For batch processing of large datasets, for example, you might look at AWS Batch or Amazon EMR.

Some tasks need long-running compute or more control over the environment. For those, Amazon EC2 (virtual servers) or AWS Fargate (containers without managing servers) may be more appropriate. The key is choosing the right tool for the job. AWS's services can be combined to fit your business or organizational needs, so there's usually one that fits without forcing a square peg into a round hole.

Setting Up Your AWS Environment for Success

Before you start worrying about the `aws lambda size limit`, make sure your AWS environment is set up correctly, because everything else builds on it. Amazon Web Services uses access identifiers to authenticate requests to AWS and to identify the sender of a request. There are three types: (1) AWS access key identifiers, (2) temporary security credentials, and (3) IAM roles. Understanding them is crucial for operating securely.

Getting your environment ready starts with creating your AWS account and configuring your development workspace. A well-configured environment lets you focus on your code and on managing the `aws lambda size limit`, instead of getting bogged down by access or setup issues. You can learn more about AWS Lambda features on the official AWS site.

Preparing your workspace means installing the right tools, such as the AWS CLI, and setting up your credentials securely. Then you can deploy and manage your Lambda functions easily while keeping an eye on their size and performance.

Frequently Asked Questions About AWS Lambda Size Limits

People often have a few common questions about the `aws lambda size limit`, especially when they're just starting out or running into issues. Here are some that come up a lot.

What is the maximum deployment package size for AWS Lambda?

A zipped deployment package can be at most 50 MB if you upload it directly through the console or CLI. You can deploy a larger .zip if you upload it to an S3 bucket and point your Lambda function at that object. In that case the effective ceiling is the 250 MB unzipped limit. That distinction is important for managing your `aws lambda size limit` effectively.

What is the unzipped size limit for AWS Lambda?

The unzipped size limit is 250 MB. It covers your function code, its dependencies, and any layers or custom runtime once they're extracted into the execution environment. It applies whether you uploaded the .zip directly or via S3. In practice, it's often the real bottleneck for larger projects.

How can I reduce my AWS Lambda function size?

There are several ways to reduce your Lambda function size:

- Trim unnecessary files and modules from your deployment package.
- Use Lambda Layers to share common dependencies across multiple functions.
- Move large assets to services like S3.
- For much larger requirements, package your function as a container image of up to 10 GB.
