AWS Lambda Layer Size Limit: What You Need To Know Today

Have you ever felt like your AWS Lambda function is hitting a wall? It's as if you're trying to squeeze a lot of stuff into a space that just isn't big enough. That is what many developers experience when they bump into the limits on AWS Lambda layer sizes. It's a common challenge when you're building serverless applications.

AWS Lambda is a powerful tool for running code without having to manage servers, and it's a core part of what makes serverless computing so appealing. You focus on your code, and AWS handles pretty much everything else. But even with all that freedom there are boundaries, and understanding them, especially the ones for layers, is important for building things that work well.

This article will help you get a handle on the AWS Lambda layer size limit. We'll look at what the limits mean, why they are there, and some smart ways to work within them, so your applications can build and scale without getting stuck because something is just too big.

Table of Contents

- Understanding AWS Lambda and Layers

- The Core of the Matter: AWS Lambda Layer Size Limits

- When Your Layers Grow Too Big: Common Challenges

- Strategies for Managing Large AWS Lambda Layers

- Monitoring and Keeping Track of Layer Size

- Frequently Asked Questions About Lambda Layer Size

Understanding AWS Lambda and Layers

What is AWS Lambda?

AWS Lambda is a service that lets you run code without having to think about servers. It's part of Amazon Web Services, a comprehensive cloud provider that offers over 200 fully featured services from data centers all over the world. Lambda is used for a lot of different things, from processing data to powering web applications.

When you use Lambda, you upload your code, and AWS takes care of running it when an event happens. This could be, for example, a file being added to storage or a message arriving in a queue. You don't have to worry about the underlying infrastructure.

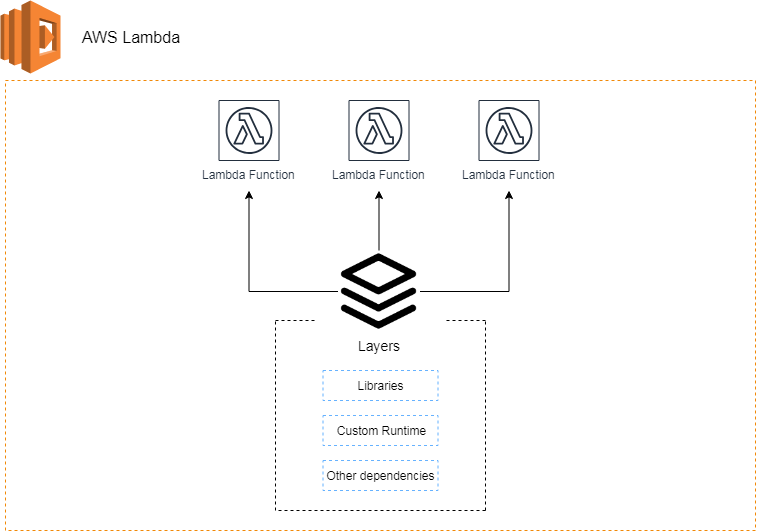

What are Lambda Layers?

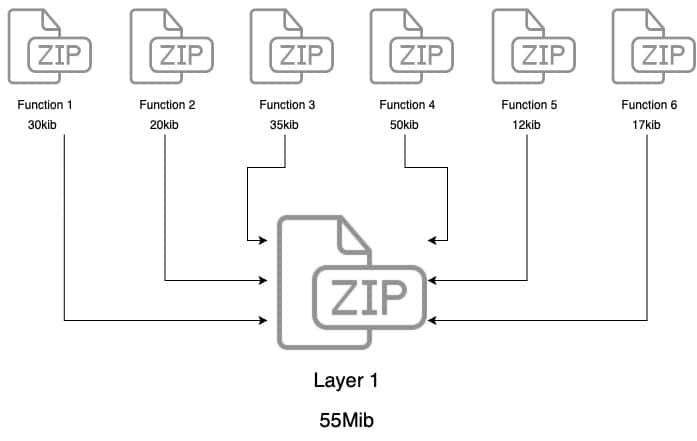

Lambda layers are a way to manage your code dependencies. Imagine you have a few Lambda functions that all use the same set of libraries or the same custom runtime. Instead of putting those libraries into each function's deployment package, you can put them into a layer, and all your functions can refer to that layer.

This approach has some good points. It makes your function deployment packages smaller, and it helps with code reuse, so you're not duplicating the same stuff over and over. In a Java project, for instance, you can declare the shared dependencies in a separate project with its own `pom.xml` and put the resulting JARs into a layer. It's a way to keep things tidy.

The Core of the Matter: AWS Lambda Layer Size Limits

Now, let's get to the main point: the size limits. They are important to understand because they affect how you build and deploy your Lambda functions. AWS sets these limits for various reasons, including performance and resource management.

The Main Deployment Package Limit

The most important limit to keep in mind is the total deployment package size. This package includes your Lambda function code *and* any layers you attach to it. As per the documentation, the deployment package limit is 250 MB when unzipped. That's 262,144,000 bytes (250 × 1,024 × 1,024).

This quota applies to all the files you upload. So if you have your function code and you add, say, three layers, the total size of all of it, once it's unpacked and ready to run, cannot go over 250 MB. It's one of the most common limits developers run into when working with AWS Lambda.

Individual Layer Size Rules

When it comes to individual layers, there's a bit of nuance. A layer does not get a separate, larger allowance: the 250 MB figure is an unzipped limit, not a zipped one. What differs is how you upload the archive. A .zip file uploaded directly is limited to 50 MB, while a larger archive has to be published from Amazon S3.

However the archive gets there, the unzipped size is what counts. A layer that is only 40 MB zipped might expand to, say, 300 MB when unzipped, and that alone would break the deployment package limit. So you need to keep the total unzipped size of your function and all its layers under 250 MB. It's a bit like having several suitcases that each close just fine, while the airline has a total weight limit for all your luggage.
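To see the gap between zipped and unzipped size before you upload anything, you can read the uncompressed sizes straight from the archive's directory. The following is a minimal sketch using only Python's standard library; the helper names and the demo archive are made up for illustration.

```python
import io
import zipfile

UNZIPPED_LIMIT_BYTES = 250 * 1024 * 1024  # 262,144,000 bytes


def unzipped_size(archive) -> int:
    """Return the total uncompressed size of all entries in a .zip archive.

    `archive` may be a file path or a binary file-like object.
    """
    with zipfile.ZipFile(archive) as zf:
        return sum(info.file_size for info in zf.infolist())


def make_demo_archive() -> bytes:
    """Build a small in-memory archive from highly compressible data."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("python/demo/data.txt", "a" * 1_000_000)
    return buffer.getvalue()


demo = make_demo_archive()
expanded = unzipped_size(io.BytesIO(demo))
print("zipped bytes:  ", len(demo))
print("unzipped bytes:", expanded)
print("fits the unzipped limit:", expanded <= UNZIPPED_LIMIT_BYTES)
```

For a real layer you would pass the path of your archive, for example `unzipped_size("layer.zip")`, and add up the results for every layer plus the function package.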

The function code itself follows the same rules: its .zip archive must stay below 50 MB for a direct upload, and its unzipped contents count toward the same 250 MB total. Layers do not get an extra allowance on top of that, so you have to keep both the function and its layers in mind.

Total Layers and Their Count

You can include up to five layers per function. That number is fixed, so you can't just keep adding more layers, and you have to be smart about how you group your dependencies. If you have, say, six different sets of libraries, you'll need to combine some of them into fewer layers or rethink your approach a little.

And again, all five layers plus your function code must collectively stay under the 250 MB unzipped deployment package limit. Having five layers doesn't mean you get five times 250 MB. It means five layers *contributing* to the single 250 MB unzipped total. It's pretty strict.
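The two rules above (at most five layers, and one shared 250 MB unzipped budget) are easy to check with a little arithmetic. Here is a small sketch; the function name and the example sizes are assumptions for illustration, and the byte counts are expected to be unzipped sizes.

```python
from typing import List

MIB = 1024 * 1024
MAX_LAYERS = 5
UNZIPPED_LIMIT_BYTES = 250 * MIB  # 262,144,000 bytes


def check_package(function_bytes: int, layer_bytes: List[int]) -> List[str]:
    """Return a list of problems; an empty list means both limits are met.

    All sizes are unzipped sizes in bytes.
    """
    problems = []
    if len(layer_bytes) > MAX_LAYERS:
        problems.append(
            f"{len(layer_bytes)} layers attached; the maximum is {MAX_LAYERS}"
        )
    total = function_bytes + sum(layer_bytes)
    if total > UNZIPPED_LIMIT_BYTES:
        over = total - UNZIPPED_LIMIT_BYTES
        problems.append(
            f"unzipped total is {total:,} bytes, {over:,} bytes over the limit"
        )
    return problems


# Hypothetical example: a 20 MiB function with five 50 MiB layers.
for problem in check_package(20 * MIB, [50 * MIB] * 5):
    print(problem)
```

In this made-up example, each layer is well under 250 MB on its own, yet the function cannot be deployed because the combined total is what counts.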

Why These Limits Exist

These size limits are there for good reasons. One big reason is performance: smaller deployment packages mean faster cold starts. When a Lambda function runs for the first time, or after a period of inactivity, AWS needs to download and unpack your code. If the package is very large, that takes more time, and your users might experience a delay.

Another reason is resource management. AWS needs to allocate resources efficiently across its vast infrastructure, and keeping package sizes manageable helps it do that. It's about maintaining the overall health and responsiveness of the service for everyone. These limits are a trade-off for the benefits of serverless computing.

When Your Layers Grow Too Big: Common Challenges

It's fairly easy to hit these size limits, especially if you're using languages with large dependency trees, like Java with many JAR files, or Python with complex libraries. It's a situation that many developers face, so you're not alone if you find your layers getting a bit chunky.

Hitting the Unzipped Wall

The 250 MB unzipped deployment package limit is the one that often catches people off guard. You might have a zipped layer that looks small enough, but when it expands, it blows past the limit. This happens a lot with compiled languages or frameworks that include many files, even if those files are small individually. It's like a compressed file that turns into a huge folder once you open it.

This is where monitoring your layer size and usage becomes very important. You need to know how much space your dependencies are actually taking up once they are ready to run. It's not just about the size of the file you upload, but the size of the contents inside, which is something people sometimes forget.

Dealing with Large Dependencies

What if you have a JAR file, for instance, that is more than 250 MB by itself? Then you can't put it into a Lambda layer at all. A JAR is already a compressed archive, so it won't shrink much when zipped, and once the layer is extracted the JAR counts in full toward the 250 MB unzipped limit. This is where you need to start thinking about different approaches to manage your code. It's a bit of a puzzle sometimes.

This challenge is particularly noticeable when you're working with machine learning libraries or complex data processing tools that come with a lot of components. These tools are powerful, but they bring a lot of bulk with them, so you have to find a way to make them fit.

Strategies for Managing Large AWS Lambda Layers

So, what do you do when your layers are getting too big, or when you have dependencies that just won't fit? Thankfully, there are some good strategies you can use. It's about being clever with how you package and deploy your code.

Using S3 for Larger Layers

One of the main ways to handle large Lambda layers is to use Amazon S3. You need S3 because AWS Lambda limits the size of direct uploads: when you upload a deployment package or a layer directly, the .zip file cannot be larger than 50 MB. S3 can store much larger files.

The process usually involves uploading your zipped layer file to an S3 bucket first. Then, when you publish the layer version, you point Lambda to the S3 object where the archive is stored rather than uploading it directly. This method lets you work with archives that are significantly larger than the direct upload limit. It's a common and effective workaround for big files, but it does not lift the 250 MB unzipped limit, which still applies to the function and all its layers together.
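The two steps described above could be sketched roughly as follows with the AWS SDK for Python (boto3). This is an illustration under assumptions, not a complete deployment script: the function name and all bucket, key, and layer names are hypothetical, and the clients are passed in as parameters so the code itself does not depend on credentials being configured.

```python
def publish_layer_from_s3(s3_client, lambda_client, zip_path, bucket, key,
                          layer_name, runtimes):
    """Upload a layer archive to S3, then publish a layer version from it.

    `s3_client` and `lambda_client` are expected to behave like
    boto3.client("s3") and boto3.client("lambda").
    Returns the ARN of the new layer version.
    """
    # Step 1: put the zipped layer into the bucket.
    s3_client.upload_file(zip_path, bucket, key)

    # Step 2: publish the layer by pointing Lambda at the S3 object
    # instead of sending the archive bytes in the request.
    response = lambda_client.publish_layer_version(
        LayerName=layer_name,
        Content={"S3Bucket": bucket, "S3Key": key},
        CompatibleRuntimes=runtimes,
    )
    return response["LayerVersionArn"]


# Example call (all names below are placeholders):
#
#   import boto3
#   arn = publish_layer_from_s3(
#       boto3.client("s3"),
#       boto3.client("lambda"),
#       "layer.zip",
#       "my-artifacts-bucket",
#       "layers/shared-deps.zip",
#       "shared-deps",
#       ["python3.12"],
#   )
```

Passing the clients in keeps the helper easy to test with stand-in objects. Keep in mind that the unzipped limit is still checked when the layer is published or attached.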

Optimizing Your Dependencies

Sometimes the problem isn't that you *need* a huge dependency, but that your dependency package includes a lot of stuff you don't actually use. This is where optimization comes in. You can, for instance, remove unnecessary files or modules from your libraries. In some programming environments, this practice is called "tree shaking."

For Java projects, this might mean carefully selecting only the specific JAR files you need, rather than including a whole framework if you're only using a small part of it. For Python, it could involve using tools that analyze your code and only package the modules that are actually imported. It's about being very selective with what you include.
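As a simple illustration of being selective, the sketch below builds a layer archive from a directory while leaving out bytecode caches and test folders. It is a much cruder technique than import analysis, and the exclusion lists are an assumption: some packages need files that look removable, so verify the result against your own dependencies.

```python
import os
import zipfile

# Assumption: these names are safe to drop for your dependencies.
SKIP_DIRS = {"__pycache__", "tests", "test"}
SKIP_SUFFIXES = (".pyc", ".pyo")


def build_pruned_zip(source_dir: str, zip_path: str) -> int:
    """Zip `source_dir` into `zip_path`, skipping excluded entries.

    `zip_path` should live outside `source_dir`.
    Returns the number of files written to the archive.
    """
    written = 0
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for root, dirs, files in os.walk(source_dir):
            # Prune in place so os.walk does not descend into skipped folders.
            dirs[:] = [d for d in dirs if d not in SKIP_DIRS]
            for name in files:
                if name.endswith(SKIP_SUFFIXES):
                    continue
                full_path = os.path.join(root, name)
                # Store paths relative to the source directory.
                zf.write(full_path, os.path.relpath(full_path, source_dir))
                written += 1
    return written
```

A call such as `build_pruned_zip("build/layer", "dist/layer.zip")` (both paths are placeholders) would produce the archive to upload.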

Splitting Functionality Across Multiple Lambdas

If a single Lambda function, even with layers, is becoming too large, it might be a sign that it's doing too much. Consider breaking it down into smaller, more focused Lambda functions. Each of these smaller functions can then have its own set of layers, or no layers at all if its dependencies are small enough.

This approach follows the single responsibility principle, and it can make your functions easier to manage and faster to execute. It also helps keep individual deployment packages well within the size limits. For example, if you have one function that does data ingestion, processing, and storage, you might split it into three separate functions, each with its own job. It's a good way to keep things manageable.

Monitoring and Keeping Track of Layer Size

Just like you monitor other aspects of your cloud services, keeping an eye on your Lambda layer sizes is a good idea. It's a bit like watching your car's fuel gauge: you want to know where you stand so you don't run into problems unexpectedly. Tools for tracking layer size and usage are very helpful here.

Tools for Tracking

AWS provides tools that can help you see the size of your deployment packages. When you upload a layer or a function, the AWS console shows you its size. You can also use the AWS CLI or SDKs to check these details programmatically. There are also third-party tools that visualize the contents of a deployment package, which can be very useful for finding what's taking up space.
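As one way to check sizes programmatically, the sketch below reads the `CodeSize` values that the Lambda `GetFunction` API returns for a function and its attached layers. The helper name is made up, and the client is passed in so you can supply `boto3.client("lambda")` yourself. To my understanding these values describe the stored archives, so they are likely to understate the unzipped footprint; treat them as an early warning, not as the final number.

```python
def report_code_sizes(lambda_client, function_name):
    """Return a dict mapping 'function' and each layer ARN to its CodeSize.

    `lambda_client` is expected to behave like boto3.client("lambda").
    Sizes are in bytes, as reported by the GetFunction API.
    """
    response = lambda_client.get_function(FunctionName=function_name)
    config = response["Configuration"]

    sizes = {"function": config["CodeSize"]}
    # "Layers" is absent when the function has no layers attached.
    for layer in config.get("Layers", []):
        sizes[layer["Arn"]] = layer["CodeSize"]
    return sizes


# Example call (the function name is a placeholder):
#
#   import boto3
#   for name, size in report_code_sizes(boto3.client("lambda"), "my-function").items():
#       print(f"{size:>12,d}  {name}")
```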

For example, if you're working with a specific language, there might be build tools or plugins that can give you a breakdown of your package size. This kind of information is really helpful for figuring out where your biggest dependencies are and where you might be able to make things smaller. It's about getting a clear picture.
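If no such plugin is at hand, a rough breakdown can be produced from the archive itself. The sketch below groups entries by their leading path components and lists the largest groups by uncompressed size. It uses only the standard library; the package names in the demo archive are invented, and the default depth of 2 assumes a layout like `python/<package>/...`.

```python
import io
import zipfile
from collections import Counter


def largest_groups(archive, depth=2, top_n=5):
    """Sum uncompressed bytes per leading path prefix.

    Returns up to `top_n` (prefix, bytes) pairs, largest first.
    """
    totals = Counter()
    with zipfile.ZipFile(archive) as zf:
        for info in zf.infolist():
            if info.is_dir():
                continue
            group = "/".join(info.filename.split("/")[:depth])
            totals[group] += info.file_size
    return totals.most_common(top_n)


# Demo archive; the package names are made up for illustration.
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as zf:
    zf.writestr("python/bigpkg/core.py", b"x" * 5000)
    zf.writestr("python/bigpkg/data/table.bin", b"x" * 20000)
    zf.writestr("python/smallpkg/__init__.py", b"x" * 300)

for group, size in largest_groups(io.BytesIO(buffer.getvalue())):
    print(f"{size:>10,d}  {group}")
```

Running the same function on a real layer archive, for example `largest_groups("layer.zip")`, shows which packages are worth trimming first.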

Regular Reviews

It's a good practice to regularly review your Lambda functions and their layers. Dependencies can change over time, and new versions might be larger or smaller. A library you needed six months ago might have a lighter alternative now. By doing these reviews, you can catch potential size issues before they become real problems.

This also helps ensure that you're only including what's truly necessary. Sometimes a dependency is added for a quick fix and then just forgotten. Regular checks help you keep your environment lean and efficient. It's a part of good cloud hygiene, you could say.

Frequently Asked Questions About Lambda Layer Size

What is the maximum size for an AWS Lambda layer?

A layer's .zip archive can be up to 50 MB when you upload it directly; larger archives have to be published from Amazon S3. In either case, the total unzipped size of your Lambda function code *plus* all its attached layers cannot exceed 250 MB. So a layer always has to fit within the overall unzipped limit when combined with everything else.

How many layers can an AWS Lambda function use?

An AWS Lambda function can use up to five layers. This means you can attach a maximum of five different layers to a single function. You'll need to make sure that the combined unzipped size of these five layers and your function code stays under the 250 MB total deployment package limit.

What happens if my AWS Lambda layer is too big?

If your AWS Lambda layer, or the total deployment package (function code plus all layers), exceeds the size limits, your deployment will fail. You'll typically get an error message indicating that the package size limit has been exceeded. This means your function won't be able to deploy or update until you reduce its size to fit within the allowed limits. You might, for instance, need to publish a large archive through S3, optimize your dependencies, or split the function into smaller ones.
